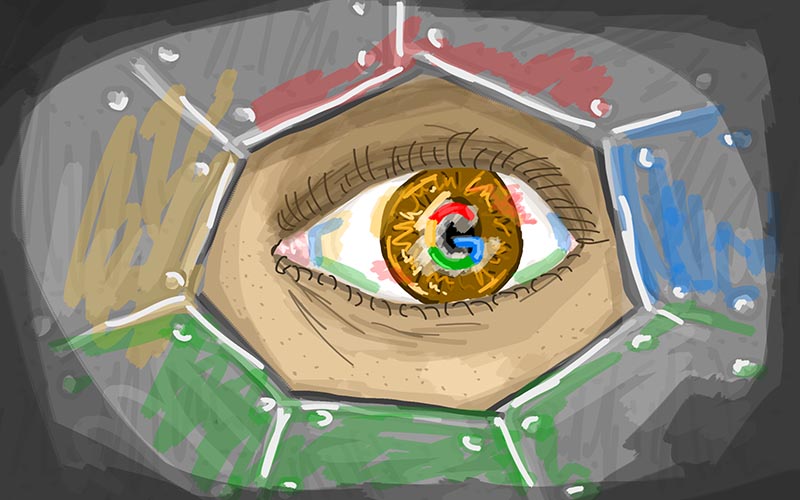

Joe Toscano’s book Automating Humanity is illustrated with visions of two worlds: one a cold, corporate wasteland, where the ubiquitous iconography of companies like Facebook, Google, and Apple looms large over their slavish users; and the other a warm wonderland, where technology and nature have come together in miraculous ways, allowing for off-planet farming and living architecture. The images are beautifully rendered by graphic designer Mike Winkelmann, and they evoke the fork in the road that Toscano suggests technology presents us with — to heaven or hell.

Toscano, a former consultant to Google, aims in Automating Humanity to lay bare the current tech-related issues facing society, like technological addiction, disinformation, and automation-driven mass unemployment. The book is an interesting read from an industry insider, but where it really suffers is in the author’s suggestions for how we can course-correct to reach a better future. His solutions rely far too much on government regulation, which, with trust in government reaching historic lows in the United States, seems wholly inadequate. Indeed, Automating Humanity fails to address the most promising development in the industry: that of workers at companies like Google, Uber, and Microsoft organizing themselves to oppose their employers. If change is coming, it will require tech workers to break ranks with their bosses, something that an ex-Googler like Toscano must know.

I recently spoke with Toscano about Automating Humanity, the excesses of modern technology, and worthwhile cures. Our conversation has been lightly edited for clarity and brevity.

Arvind Dilawar: How did your experience working for Google make your perspective on technology more critical? Was there a moment that “opened your eyes,” so to speak?

Joe Toscano: I had always been aware of various issues in the technology space. I actually got into tech with the intent to help make change. I’d say what working with Google enabled me to do was experience these issues at scale, see how they’re handled, and understand how to make systemic change.

There were several moments throughout my time there that raised my concerns, but I’d say the biggest was just watching the system and realizing that we were all automating our own jobs away. I know I’ll be fine because I’m a technical individual who has the skills to adapt in the modern world, but I know there are billions of people who are not and they will get wiped away by the tsunami that is the upcoming wave of automation.

I believe this is the future. It’s just simple economics, but I believe there has to be some responsibility to prepare the world for this. Otherwise, we’re going to see mass unemployment, which, I believe, will lead to mass uprisings of the general population. I didn’t feel taking a six-figure salary was worth taking part in being responsible for it, and I felt that I would be better served to prepare our world for this new world.

AD: Of the problems that you describe in Automating Humanity, is there one in particular that you are most worried about?

JT: I’m most concerned about automation-driven unemployment, so much so that I even gave a TEDx talk about it called “Want to Work for Google? You Already Do.” However, bringing this issue to light is very difficult because automation also makes our lives better in many ways, and most people aren’t concerned about discussing why it’s bad until their life is immediately impacted. Unfortunately, by then, it’s too late. So how do we proactively discuss this? That’s what I spend every day trying to figure out, and it is a big reason why I wrote the book: to enable a larger discussion.

AD: When it comes to automation and unemployment, are there facets of the issue that you feel aren’t getting the coverage they deserve?

JT: I believe that the media generally doesn’t understand the fact that data can be — and increasingly is — collected on everything we do and that as soon as we have a reasonable amount of data in any area, we can turn that into machine intelligence. It’s a very hard concept to describe, but it’s as simple as systems like ReCaptcha being used to train machine vision. There are all these little trigger points across the internet of things that at their immediate engagement don’t seem troublesome, but when combined with other data points, that may or may not be collected in tandem, can come together to make much greater threats to humanity.

I’m not running scared to the backwoods because of it — although it very well could head that way if we don’t do something about it. It is very frustrating that the media is not interested in covering a topic until it has a damaging impact on society. Once this stuff hits critical mass, it’s too late. Once it reaches that point, you can cover it all you want and the efficiencies in the world brought on by automation won’t be going away no matter how many blog posts are made. It’s a simple matter of economics.

Just remember: Horses were much better at jumping over streams, providing warmth and love, and many other things that cars are not. But cars were better for getting us from point A to point B. Will humans become the horses of the future economy? I think if we leave it up to media coverage alone, we will.

AD: Many of the course-corrections that you suggest in Automating Humanity rely on government regulation of technology. Considering that the relationship between governments and the people they supposedly represent is currently so fraught, do you still believe that’s the best way forward?

JT: I definitely understand your concern, and I don’t entirely disagree. But I do believe in a better future. And in that sense, yes, I still do believe that regulation will be required. However, I also realize the disconnect and the time it takes to move anything forward through our legislative branch. That’s why I’ve also created my company, the Better Ethics and Consumer Outcomes Network (BEACON), where we work with companies to proactively identify these problems and translate them into positive-sum business solutions: opportunities to improve both the consumer experience and the business’s bottom line. Our goal is to move these issues forward on business terms, while also helping regulators figure out the best way to create regulation that shapes a better future without destroying our ability to innovate.

AD: What kind of business-minded solutions do you think can address the issues that you bring up in Automating Humanity?

JT: To be honest, I don’t think any business solutions can address the situation. I believe this is an educational issue. I do believe we’re too far gone to try to stop automation from taking millions of jobs, but we’re not too far gone to educate the kids, who will then be prepared to participate in this modern economy, and we’re not too far gone to change the law to put a wrench in the current system and slow it down.

I’m working to do that with BEACON. We leverage our insider knowledge to inform regulators, while we work day-to-day to change the operations of tech companies, then create change in the education world by leveraging our profits and talents to fund and aid in the development of educational programs. We are actively building out connections to these institutions as partners for BEACON. We’d love to be doing this work ourselves, but we’re best served using our internal corporate knowledge to push back technically. As we grow and become more solidified in our business model, our plan is to donate X percent of revenue and allow our employees to allocate five hours a week (one hour a day) to our partners.

AD: One subject that goes unaddressed in Automating Humanity is labor organizing in technology, which continues to gain momentum. What do you make of tech workers organizing to curb some of their companies’ worst abuses?

JT: There are definitely issues I wish I had more time and a higher page count to cover. At the end of the day though, this is a very large systemic issue that needs to be attacked from all angles if we want to see meaningful change and there’s no way we could spell out the entire solution in one book. So I’m thrilled to see more activation within companies and that is part of what I hoped for. I do, however, believe that our work as external advocates and luminaries enables the insiders to feel comfortable knowing that there is life on the outside and, in that sense, I believe our work complements their efforts, and vice-versa.

AD: Automating Humanity was published nearly a year ago. Since then, has there been one big development in technology that has confirmed the views you expressed?

JT: I believe that, if there’s anything that has confirmed my efforts, it is the fact that these issues are now being spoken about within the mainstream media, within regulatory bodies (through investigations and new boards), and within classrooms. That’s a very general answer, but to me, these are huge developments. It means the public is interested and concerned. And I know our work has been part of this.

Two and a half years ago, when I started this journey, speaking about these issues would have either: one, been totally ignored; or two, put me (and others doing similar things) at risk of losing our jobs or never being hired again. This was a big concern of mine and something that actually led to a mental breakdown in the middle of writing the book: the fear of eliminating my own future opportunities. But I, as well as others, took the risk and now the sentiment is changing. The public wants to know more and is now prepared to support leakers trying to illuminate these issues. The media is helping amplify those voices, and the government is starting to protect them from these companies’ backlash. These sound like very basic human rights issues, and they are, but the fact that the tide has turned on them makes a huge impact on the ability to make change.

AD: Is there one piece of advice that you would give to the average tech user? What should individuals be doing right now?

JT: Become informed enough to understand what boxes need to be checked at the ballot box in 2020, read Automating Humanity, then join the discussion. And don’t be afraid to ask questions about things you don’t know or don’t understand. Digital technologies, AI, big data — all these hot buzzwords make the information sound intimidating, but it’s not as big and scary as it sounds. We need more people in this conversation if we want to make the changes that need to be made. •